OPENLINEAGE: UNVEILING DATA LINEAGE FOR MODERN DATA ECOSYSTEMS

Understanding the journey of data from its source to its final destination is crucial for businesses and organizations. This journey, known as data lineage, has become increasingly complex with the proliferation of data sources, transformation processes, and analytical tools. Enter OpenLineage, an open-source standard that aims to simplify and standardize data lineage tracking across diverse data ecosystems.

What is data lineage?

Data lineage is the process of tracing the journey of data from its origin to its destination, tracking every transformation, processing step, and the tools or systems it interacts with along the way. With data flowing through increasingly complex architectures, the ability to accurately map and understand these movements is vital for ensuring data quality, compliance, and operational efficiency.

However, tracking data lineage is no small feat, especially with the explosion of data sources, analytics platforms, and transformation tools that make up modern data stacks.

What is OpenLineage?

OpenLineage is an open standard for data lineage collection and analysis. Initiated by Datakin and now hosted by the LF AI & Data Foundation under the Linux Foundation, OpenLineage provides a set of standardized definitions and APIs that allow different tools and platforms in the data ecosystem to share lineage metadata in a consistent format.

The primary goal of OpenLineage is to create a unified approach to collecting and utilizing data lineage information. By establishing a common language for data lineage, OpenLineage enables better interoperability between various data tools, platforms, and processes.

Key Components of OpenLineage

- OpenLineage Specification: This defines the core concepts and data model for representing lineage metadata. It includes definitions for jobs, datasets, runs, and the relationships between them.

- Integration Libraries: OpenLineage provides client libraries (for example, in Python and Java) and integrations for popular data processing frameworks such as Apache Spark, Apache Airflow, and dbt. These integrations let developers instrument their data pipelines to emit lineage events with little custom code.

- API: The OpenLineage API, defined using JSON Schema and OpenAPI, specifies how lineage events are structured and transmitted (for example, as JSON over HTTP). This standardization ensures that all tools speaking the OpenLineage language can understand and process lineage data consistently.

- Facets: These are extensible metadata attributes that can be attached to runs, jobs, and datasets. The specification defines standard facets (such as a dataset's schema), and custom facets allow organizations to include their own metadata in lineage events.
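To make the data model concrete, here is a minimal sketch of a START and a COMPLETE run event built with only the Python standard library rather than the official client. It follows the general shape of an OpenLineage run event (eventType, eventTime, run, job, inputs, outputs, producer, schemaURL) with a schema facet on the output dataset. The namespaces, job and dataset names, producer URI, and the spec and facet versions in the schema URLs are illustrative assumptions, so check the current specification before relying on exact values.

```python
import json
import uuid
from datetime import datetime, timezone

# Assumed values for illustration only, not taken from any real deployment.
PRODUCER = "https://example.com/my-pipeline"  # hypothetical producer URI
SPEC_URL = "https://openlineage.io/spec/2-0-2/OpenLineage.json"  # spec version is an assumption


def schema_facet(fields):
    """Build a dataset schema facet; every facet carries _producer and _schemaURL."""
    return {
        "_producer": PRODUCER,
        # Assumed facet schema location and version; verify against the published facet specs.
        "_schemaURL": "https://openlineage.io/spec/facets/1-1-1/SchemaDatasetFacet.json",
        "fields": [{"name": name, "type": type_} for name, type_ in fields],
    }


def run_event(event_type, run_id, job_name, inputs, outputs):
    """Assemble one run event linking a run of a job to its input and output datasets."""
    return {
        "eventType": event_type,  # e.g. START, RUNNING, COMPLETE, FAIL, ABORT
        "eventTime": datetime.now(timezone.utc).isoformat(),
        "run": {"runId": run_id},
        "job": {"namespace": "example_namespace", "name": job_name},  # hypothetical namespace
        "inputs": inputs,
        "outputs": outputs,
        "producer": PRODUCER,
        "schemaURL": SPEC_URL + "#/definitions/RunEvent",
    }


run_id = str(uuid.uuid4())  # the same runId ties START and COMPLETE to one execution

# Hypothetical datasets: a dataset is identified by a namespace plus a name.
orders = {"namespace": "postgres://db:5432", "name": "shop.public.orders"}
daily = {
    "namespace": "postgres://db:5432",
    "name": "shop.analytics.daily_revenue",
    "facets": {"schema": schema_facet([("day", "date"), ("revenue", "numeric")])},
}

start = run_event("START", run_id, "build_daily_revenue", [orders], [daily])
complete = run_event("COMPLETE", run_id, "build_daily_revenue", [orders], [daily])
print(json.dumps(complete, indent=2))
```

Sharing one runId across the START and COMPLETE events is what lets a consumer associate both with the same execution. In practice, the official Python or Java client, or one of the framework integrations, would typically build and send these events for you.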

Why should I care about this?

Standardization and Interoperability

One of the most significant advantages of OpenLineage is its ability to standardize lineage data across different tools and platforms. This standardization enables seamless integration between various components of a data stack, from data ingestion tools to transformation engines and analytics platforms. As a result, organizations can build a comprehensive view of their data lineage without being locked into a single vendor or tool.

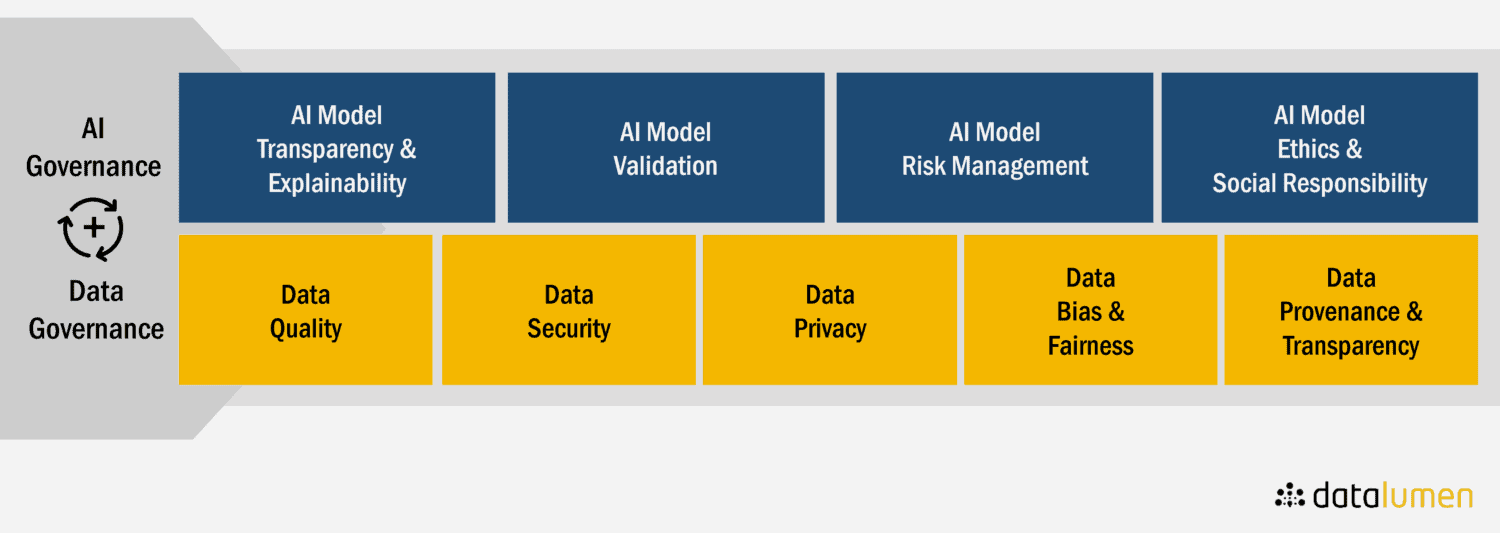

Enhanced Data Governance and Compliance

With the growing importance of data privacy and AI regulations such as the GDPR and the EU AI Act, understanding data lineage is essential for compliance. OpenLineage makes it easier to track the flow of sensitive data across systems, helping organizations ensure that data is handled in accordance with regulatory requirements. The same lineage information also supports audits and helps organizations demonstrate compliance to regulators.
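To illustrate how lineage helps track sensitive data, the sketch below builds a dataset-level graph from the inputs and outputs of run events, then finds every dataset derived, directly or indirectly, from one that holds personal data. The events are plain dictionaries reduced to the fields this analysis needs; all job and dataset names are hypothetical, and a real deployment would read events from a lineage backend rather than a hard-coded list.

```python
from collections import defaultdict, deque

# Simplified run events: only the fields needed for dataset-level lineage.
# All job and dataset names are hypothetical.
events = [
    {"job": {"name": "ingest_crm"},
     "inputs": [{"namespace": "crm", "name": "customers_raw"}],
     "outputs": [{"namespace": "warehouse", "name": "customers"}]},
    {"job": {"name": "build_marketing_list"},
     "inputs": [{"namespace": "warehouse", "name": "customers"}],
     "outputs": [{"namespace": "warehouse", "name": "marketing_list"}]},
    {"job": {"name": "build_sales_report"},
     "inputs": [{"namespace": "warehouse", "name": "orders"}],
     "outputs": [{"namespace": "warehouse", "name": "sales_report"}]},
]


def dataset_id(ds):
    # A dataset is identified by its namespace plus its name.
    return f"{ds['namespace']}/{ds['name']}"


def downstream_graph(events):
    """Map each input dataset to the output datasets written by the same job."""
    graph = defaultdict(set)
    for event in events:
        for src in event.get("inputs", []):
            for dst in event.get("outputs", []):
                graph[dataset_id(src)].add(dataset_id(dst))
    return graph


def derived_from(graph, start):
    """Breadth-first search for every dataset reachable downstream of `start`."""
    seen, queue = set(), deque([start])
    while queue:
        for nxt in graph[queue.popleft()]:
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


graph = downstream_graph(events)
# Suppose crm/customers_raw is known to contain personal data.
print(sorted(derived_from(graph, "crm/customers_raw")))
```

This is coarse, dataset-level lineage: it assumes every output of a job depends on every input of that job. OpenLineage also defines a column-level lineage facet for finer-grained tracking, which this sketch ignores.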

Improved Data Quality and Trust

By providing visibility into the entire data pipeline, OpenLineage helps data teams identify and resolve data quality issues more efficiently. When inconsistencies or errors are discovered, teams can trace the problem back to its source and see every transformation and process the data has undergone. This transparency builds trust in the data and in the insights derived from it.
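Tracing a problem back to its source amounts to walking the lineage graph upstream. The following is a minimal sketch under the same simplifying assumptions as before (hypothetical names, events reduced to jobs, inputs, and outputs): starting from a suspect dataset, it collects every job the data passed through and the root datasets that nothing else produced.

```python
from collections import defaultdict

# Simplified run events with hypothetical names; only lineage-relevant fields kept.
events = [
    {"job": {"name": "load_orders"},
     "inputs": [{"namespace": "s3", "name": "raw/orders.csv"}],
     "outputs": [{"namespace": "warehouse", "name": "orders"}]},
    {"job": {"name": "load_fx_rates"},
     "inputs": [{"namespace": "api", "name": "fx_rates"}],
     "outputs": [{"namespace": "warehouse", "name": "fx_rates"}]},
    {"job": {"name": "build_revenue"},
     "inputs": [{"namespace": "warehouse", "name": "orders"},
                {"namespace": "warehouse", "name": "fx_rates"}],
     "outputs": [{"namespace": "warehouse", "name": "revenue"}]},
]


def dataset_id(ds):
    return f"{ds['namespace']}/{ds['name']}"


def upstream_edges(events):
    """Map each output dataset to the (job, input dataset) pairs that produced it."""
    edges = defaultdict(list)
    for event in events:
        for dst in event.get("outputs", []):
            for src in event.get("inputs", []):
                edges[dataset_id(dst)].append((event["job"]["name"], dataset_id(src)))
    return edges


def trace_to_sources(edges, dataset):
    """Walk upstream from `dataset`; return the jobs crossed and the root source datasets."""
    jobs, sources, stack, seen = set(), set(), [dataset], {dataset}
    while stack:
        current = stack.pop()
        if not edges.get(current):
            sources.add(current)  # no recorded producer: treat as an original source
            continue
        for job, src in edges[current]:
            jobs.add(job)
            if src not in seen:
                seen.add(src)
                stack.append(src)
    return jobs, sources


jobs, sources = trace_to_sources(upstream_edges(events), "warehouse/revenue")
print(sorted(jobs))
print(sorted(sources))
```

A dataset with no recorded producer is only a "source" as far as the collected events know; if some upstream system does not emit lineage, the trace stops there.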

Efficient Troubleshooting and Debugging

When issues arise in data pipelines or analytics, OpenLineage’s detailed lineage information becomes invaluable. Data engineers and analysts can trace the path of data through various systems, identifying where problems may have occurred. This capability significantly reduces the time and effort required for troubleshooting, leading to faster resolution of data-related issues.
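Because run events also record each run's state, lineage data can point at where a pipeline actually broke. The sketch below is one assumed way to do this, not a feature of any particular tool: it keeps the latest event per runId (ordered by eventTime) and reports runs whose latest state is FAIL or ABORT, or that never reported a terminal event. The event values and job names are hypothetical.

```python
from datetime import datetime

# Hypothetical run events from several jobs; they may arrive out of order.
events = [
    {"eventType": "START", "eventTime": "2024-05-01T02:00:00+00:00",
     "run": {"runId": "r1"}, "job": {"name": "load_orders"}},
    {"eventType": "COMPLETE", "eventTime": "2024-05-01T02:05:00+00:00",
     "run": {"runId": "r1"}, "job": {"name": "load_orders"}},
    {"eventType": "FAIL", "eventTime": "2024-05-01T02:12:00+00:00",
     "run": {"runId": "r2"}, "job": {"name": "build_revenue"}},
    {"eventType": "START", "eventTime": "2024-05-01T02:10:00+00:00",
     "run": {"runId": "r2"}, "job": {"name": "build_revenue"}},
    {"eventType": "START", "eventTime": "2024-05-01T02:20:00+00:00",
     "run": {"runId": "r3"}, "job": {"name": "publish_dashboard"}},
]


def latest_state_per_run(events):
    """Keep the most recent event per runId, ordering by eventTime."""
    latest = {}
    for event in sorted(events, key=lambda e: datetime.fromisoformat(e["eventTime"])):
        latest[event["run"]["runId"]] = event
    return latest


def problem_runs(events):
    """Return sorted (job name, runId, state) tuples for runs that need attention."""
    problems = []
    for run_id, event in latest_state_per_run(events).items():
        state = event["eventType"]
        # FAIL/ABORT are explicit failures; START/RUNNING with nothing after them
        # may still be in progress, or may have died without reporting.
        if state in ("FAIL", "ABORT", "START", "RUNNING"):
            problems.append((event["job"]["name"], run_id, state))
    return sorted(problems)


for job, run_id, state in problem_runs(events):
    print(f"{job} (run {run_id}): {state}")
```

Combined with an upstream trace of the affected dataset, a list like this narrows troubleshooting down to the specific failed runs that fed it.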

Support for Data Cataloging and Metadata Management

Data catalogs and metadata management tools that support OpenLineage, such as Marquez, the reference implementation of the standard, can ingest its lineage events directly. This rich lineage information enhances the capabilities of these tools, allowing for more comprehensive documentation of data assets and supporting better data discovery, understanding, and use across the organization.

Conclusion

OpenLineage represents a significant step forward in the field of data lineage and metadata management. By providing a standardized, open-source approach to tracking data lineage, it addresses many of the challenges faced by modern data-driven organizations. From improving data governance and quality to speeding up troubleshooting and enriching data catalogs, OpenLineage offers a wide range of benefits.

As data ecosystems continue to grow in complexity, tools like OpenLineage will become increasingly important. Organizations that adopt OpenLineage stand to benefit from better data management, greater efficiency, and more reliable data-driven decision-making.

The open nature of the project allows it to keep evolving, driven by the needs of the data community. As more tools and platforms adopt the OpenLineage standard, we can expect even greater interoperability and richer capabilities in data lineage tracking.