THE VITAL ROLE OF DATA SHARING AGREEMENTS AND CONTRACTS IN ENSURING SAFE & RESPONSIBLE DATA EXCHANGE

What Are Data Sharing Agreements & Contracts?

Data sharing agreements and contracts are essentially documents that outline the terms and conditions of sharing data between two or more parties. These agreements are important to ensure that data is shared in a safe and responsible manner, and that all parties involved understand their rights and obligations.

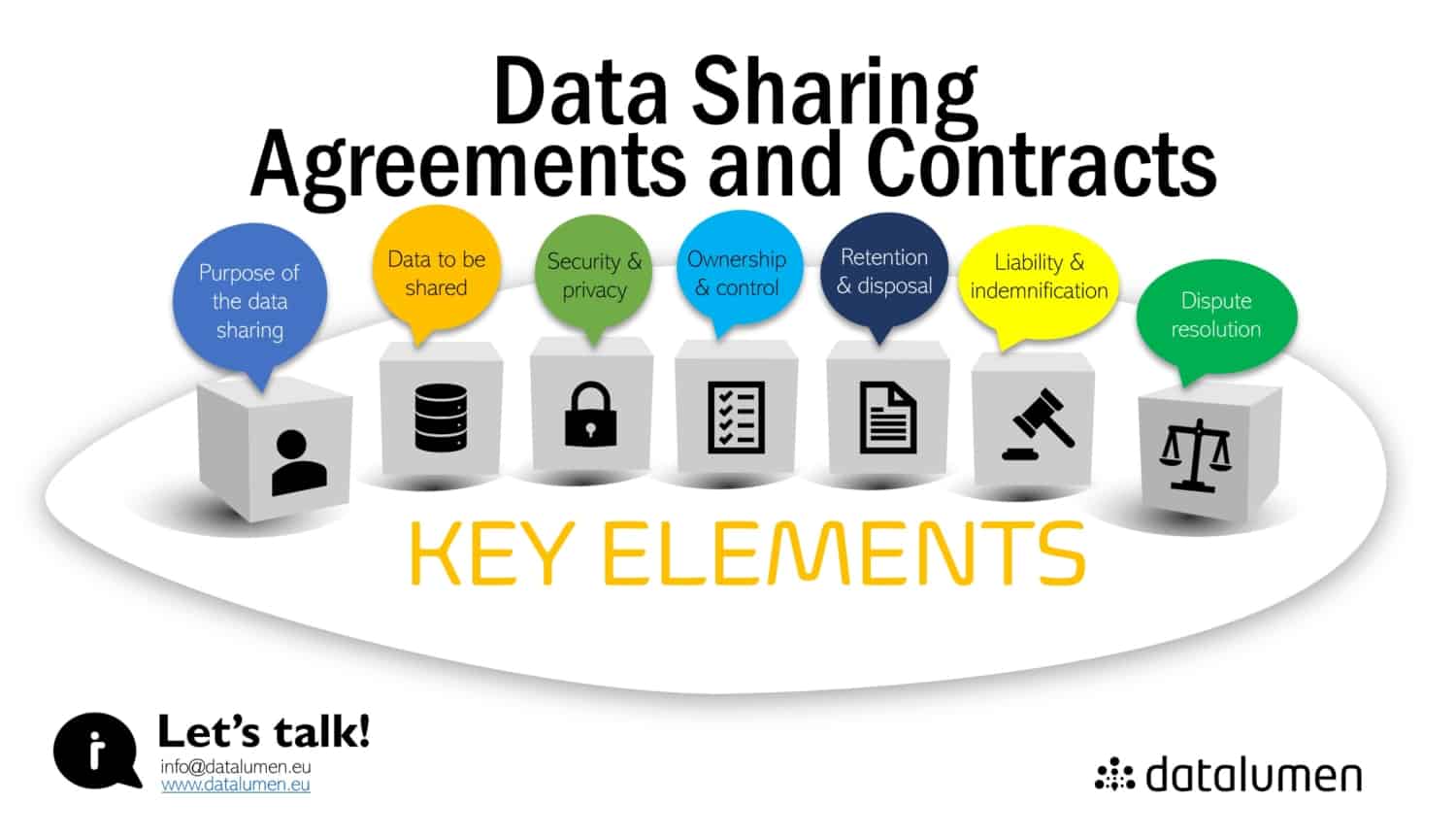

Key Elements

Data sharing agreements typically include the following elements:

- Purpose of data sharing: The reason why the data is being shared and how it will be used.

- Data to be shared: The type of data that will be shared, including any restrictions or limitations.

- Data security and privacy: The measures that will be taken to protect the data and ensure its privacy.

- Data ownership and control: The ownership and control of the data, including any intellectual property rights.

- Data retention and disposal: The length of time that the data will be retained and how it will be disposed of.

- Liability and indemnification: The responsibilities and liabilities of each party involved in the data sharing, and any indemnification clauses.

- Dispute resolution: The process for resolving any disputes that may arise during the data sharing process.

To Conclude

Data sharing agreements and contracts are important to ensure that data is shared in a responsible and safe manner, and that all parties involved understand their rights and obligations. They help to establish trust and transparency between parties, and can help to prevent legal and financial consequences that may arise from data breaches or misuse.