Customer & household profiling, personalization, journey analysis, segmentation, funnel analytics, acquisition & conversion metrics, predictive analytics & forecasting, … The marketing goal of delivering a trustworthy and complete view of the customer across different channels can be quite difficult to accomplish.

A substantial number of marketing departments have chosen to rely on a mix of platforms, ranging from CEM/CXM, CDP, CRM, eCommerce, Customer Service, Contact Center and Marketing Automation to Marketing Analytics. Many of these platforms are best of breed and come from a variety of vendors that are leaders in their specific market segment. Internal custom-built solutions (Microsoft Excel, homebrew data environments, …) always complete this type of setup.

According to a Forrester study, although 78% of marketers claim that a data-driven marketing strategy is crucial, as many as 70% of them admit they have poor-quality and inconsistent data.

The challenges

Creating a 360° customer view across this diverse landscape is not a walk in the park. All of these marketing platforms provide added value, but they are essentially separate silos. Each environment uses different data, and the data they have in common is typically used in a different way. If you need to join all these pieces together, you need some magical super glue. The reality is that none of the marketing platform vendors actually has this in house.

Another point of attention is your data scope. We don’t need to explain to you that customer experience is the hot topic in marketing nowadays. However, marketers need to do much more than just analyze customer experience data in order to create real customer insight.

Creating insight also requires that the data you analyze goes beyond the traditional customer data domain. Combining customer data with, for example, the proper product/service, supplier, financial, … data is fundamental for this type of exercise. These extended data domains are usually lacking in any one particular platform, or the required level of detail is not present.

Recent research from KPMG and Forrester Consulting shows that 38% of marketers claimed they have a high level of confidence in the data and analytics that drive their customer insights. That said, only a third of them seem to trust the analytics they generate from their business operations.

The foundations

Regardless of the mix of marketing platforms, many marketing leaders don’t succeed in taking full advantage of all their data. As a logical result, they also fail to make a real impact with their data-driven marketing initiatives. The underlying reason is that many marketing organizations lack a number of crucial data management building blocks that would allow them to break out of these typical martech silos. The most important data capabilities that you should take into account are:

| Capability | Description |
| --- | --- |
| Master Data Management (aka MDM) | Creating a single view, the so-called golden record, is the essence of Master Data Management. It allows you to make sure that a customer, product, etc. is consistent across different applications. |
| Business Glossary | Having the correct terms & definitions might seem trivial, but in the majority of organizations noise on the line is the reality. Crystal-clear terms and definitions are a basic requirement to have all stakeholders manage the data in the same way and to prevent conflicts and waste down the data supply chain. |
| Data Catalog | Imagine Google-like functionality to search through your data assets. Find out what data you have, where it originates, and how and where it is being used. |
| Data Quality | The why of proper data quality is obvious for any data-consuming organization. If you have a disconnected data landscape, data quality is even more important because it also facilitates the automatic match & merge glue exercise that you put in place to come to a common view on your data assets. |
| Data Virtualization | Getting real-time access to your data in an ad hoc and dynamic way is one of the missing pieces to get to your 360° view on time and within budget. Forget about traditional consumer headaches such as long waiting times, misunderstood requests, lack of agility, etc. |

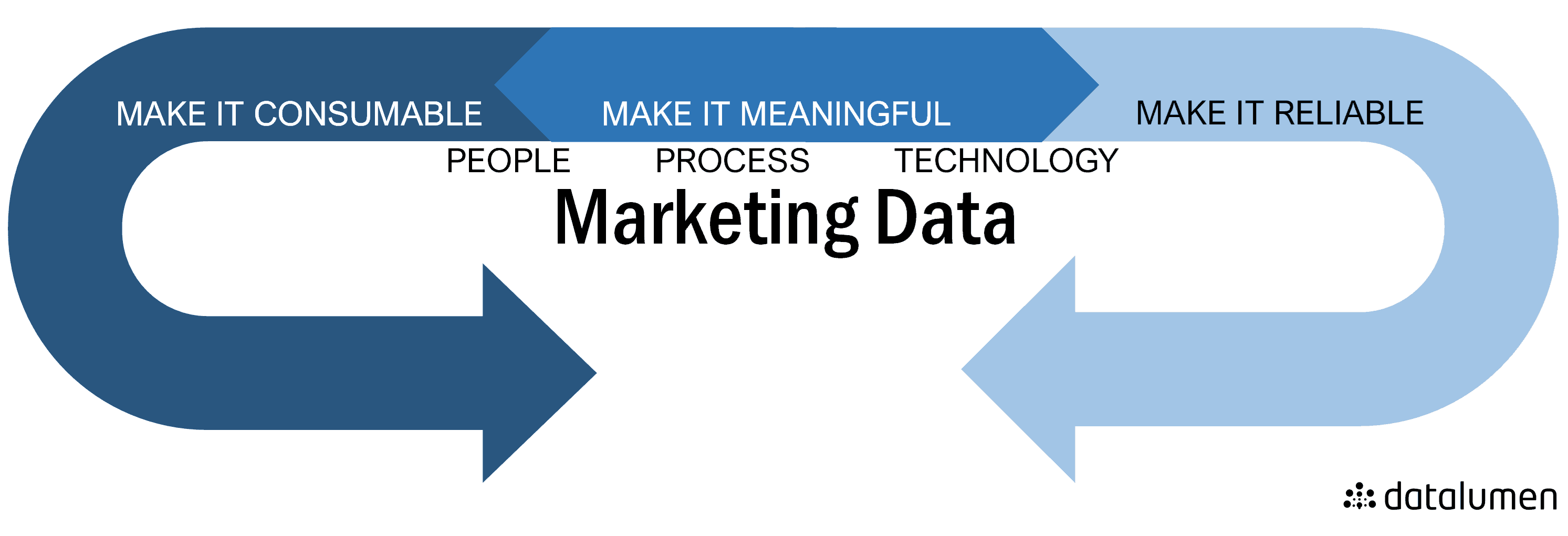
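To make the match & merge idea behind the golden record a bit more tangible, here is a minimal sketch in Python. It is an illustration under stated assumptions, not how any particular MDM product works: the record layout, the source names (`crm`, `ecommerce`, `contact_center`), the choice of a normalized email address as match key and the "most recent non-empty value wins" survivorship rule are all hypothetical. Real implementations usually add fuzzy matching and data stewardship workflows on top.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CustomerRecord:
    source: str            # hypothetical platform name, e.g. "crm"
    email: Optional[str]
    name: Optional[str]
    phone: Optional[str]
    updated: str           # ISO date, so string comparison sorts by time


def match_key(record):
    """Data quality step: normalize the email so trivial variants match."""
    return (record.email or "").strip().lower()


def merge(records):
    """Survivorship rule (assumed): the most recent non-empty value wins."""
    newest_first = sorted(records, key=lambda r: r.updated, reverse=True)

    def pick(field):
        for r in newest_first:
            value = getattr(r, field)
            if value:
                return value
        return None

    return {
        "email": match_key(newest_first[0]),
        "name": pick("name"),
        "phone": pick("phone"),
        "sources": sorted({r.source for r in records}),
    }


def golden_records(records):
    """Group records by match key, then merge each group into one record."""
    groups = {}
    for r in records:
        key = match_key(r)
        if not key:
            continue  # no usable key: leave for manual review
        groups.setdefault(key, []).append(r)
    return [merge(group) for group in groups.values()]


# Made-up sample data from three hypothetical silos.
records = [
    CustomerRecord("crm", "Ann.Lee@Example.com ", "Ann Lee", None, "2023-01-10"),
    CustomerRecord("ecommerce", "ann.lee@example.com", None, "+32 470 00 00 00", "2023-03-02"),
    CustomerRecord("contact_center", "bob@example.com", "Bob Ray", None, "2023-02-14"),
]

golden = golden_records(records)
for record in golden:
    print(record)
```

In this sketch the CRM and eCommerce entries for Ann collapse into one record that carries the name from one silo and the phone number from the other. That only works because the email address was cleaned up first, which is why data quality and master data management go hand in hand.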

We intentionally use the term capability because this isn’t an IT story. All of these capabilities have a people, process and technology aspect, and all of them should be driven by the business stakeholders. IT and technology play a facilitating role.

The results

If you manage to put the described data management capabilities in place, you basically take control. Your organization can find, understand and make data useful. You improve the efficiency of your people and processes, and reduce your data compliance risks. The benefits in a nutshell:

- Get full visibility of your data landscape by making data available and easily accessible across your organization. Deliver trusted data with documented definitions and certified data assets, so users feel confident using the data. Take back control using an approach that delivers everything you need to ensure data is accurate, consistent, complete and discoverable.

- Increase efficiency of your people and processes. Improve data transparency by establishing one enterprise-wide repository of assets, so every user can easily understand and discover data relevant to them. Increase efficiency by using workflows to automate processes, helping improve collaboration and speed of task completion. Quickly understand your data’s history with automated business and technical lineage that helps you clearly see how data transforms and flows from system to system and source to report.

- Reduce data and compliance risks. Mitigate compliance risk by setting up data policies to control data retention and usage that can be applied across the organization, helping you meet your data compliance requirements. Reduce data risk by building and maintaining a business glossary of approved terms and definitions, helping ensure clarity and consistency of data assets for all users.

Conclusion

The data you need to be successful with your marketing efforts is there. You just have to transform it into usable data so that you can get accurate insights and make better decisions. The key in all of this is getting rid of your marketing platform silos by making sure that you have the proper data foundations in place. These data foundations speed up and extend the capabilities of your data-driven marketing initiatives.